Is MAPPO All You Need in Multi-Agent Reinforcement Learning?

Multi-Agent Proximal Policy Optimization (MAPPO), a classic multi-agent reinforcement learning algorithm, is generally considered the simplest yet most powerful algorithm of its kind. MAPPO uses global information to train a centralized value function more efficiently, whereas Independent Proximal Policy Optimization (IPPO) uses only local information to train independent value functions. In this work, we discuss the history and origins of MAPPO and uncover a startling fact: MAPPO does not outperform IPPO. IPPO achieves better performance than MAPPO in complex scenarios such as the StarCraft Multi-Agent Challenge (SMAC). Furthermore, global information can also improve the training of IPPO. In other words, IPPO with global information (which we call Multi-agent IPPO) is all you need.

Background

Multi-agent RL

Multi-Agent Reinforcement Learning (MARL) is an approach in which multiple agents are trained with reinforcement learning algorithms within the same environment. It is particularly useful in complex systems such as robot swarm control, autonomous vehicle coordination, and sensor networks, where agents interact to collectively achieve a common goal.

In multi-agent scenarios, agents typically have a limited field of view of their surroundings. This restricted view prevents them from accessing the global state, which can lead to biased policy updates and subpar performance. Such scenarios are generally modeled as Decentralized Partially Observable Markov Decision Processes (Dec-POMDPs).
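For reference, a Dec-POMDP is commonly formalized as a tuple. The symbols below follow a standard convention that we assume here (they are not defined elsewhere in this post), chosen to match the $s_t$, $o_t^i$, $a_t^i$, and $r_t$ used in the rest of the text:

$$\mathcal{M} = \left\langle \mathcal{N}, \mathcal{S}, \{\mathcal{A}^i\}_{i \in \mathcal{N}}, P, R, \{\Omega^i\}_{i \in \mathcal{N}}, O, \gamma \right\rangle$$

At timestep $t$ the environment is in a global state $s_t \in \mathcal{S}$, but each of the $n = |\mathcal{N}|$ agents receives only a local observation $o_t^i \in \Omega^i$ produced by the observation function $O$. Each agent picks an action $a_t^i \in \mathcal{A}^i$, the joint action $\mathbf{a}_t = (a_t^1, \dots, a_t^n)$ drives the transition $s_{t+1} \sim P(\cdot \mid s_t, \mathbf{a}_t)$, and all agents receive the shared reward $r_t = R(s_t, \mathbf{a}_t)$. The team seeks decentralized policies $\pi^i(a_t^i \mid o_t^i)$ that maximize $\mathbb{E}\left[\sum_t \gamma^t r_t\right]$.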

Although numerous reinforcement learning algorithms and their variants have been successfully adapted to cooperative MARL settings, their performance often leaves room for improvement. A significant challenge is non-stationarity: from each agent's perspective, the environment keeps changing because the other agents are updating their policies at the same time.

From PPO to Multi-agent PPO

Proximal Policy Optimization (PPO)

Independent PPO (IPPO)

Each agent $i$:

- Interacts with the environment and collects its own set of trajectories $\tau_i$

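- Estimates the advantages $\hat{A}^i$ and the value function $V^{\theta_i}$ using only its own experience

- Optimizes its parameterized policy $\pi_{\theta_i}$ by minimizing the clipped PPO loss:

$$\mathcal{L}(\theta_i) = -\mathbb{E}_t\left[\min\left(r_t^{\theta_i}\hat{A}_t^i,\ \textrm{clip}\left(r_t^{\theta_i}, 1-\epsilon, 1+\epsilon\right)\hat{A}_t^i\right)\right]$$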

where, for each agent $i$ at timestep $t$:

$\theta_i$: policy parameters of agent $i$

$r_t^{\theta_i}=\frac{\pi_{\theta_i}(a_t^i|o_t^i)}{\pi_{\theta_{i,\text{old}}}(a_t^i|o_t^i)}$: probability ratio between the new policy $\pi_{\theta_i}$ and the old policy $\pi_{\theta_{i,\text{old}}}$, where $a_t^i$ is the action and $o_t^i$ the observation of agent $i$

$\hat{A}_t^i=r_t + \gamma V^{\theta_i}(o_{t+1}^i) - V^{\theta_i}(o_t^i)$: one-step estimator of the advantage function, where $r_t$ is the reward at timestep $t$

$V^{\theta_i}(o_t^i) = \mathbb{E}[r_t + \gamma r_{t+1} + \gamma^2 r_{t+2} + \dots|o_t^i]$: value function

$\epsilon$: clipping parameter that constrains the step size of policy updates
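The one-step advantage estimator can be sketched in NumPy to show that, in IPPO, each agent's advantage comes only from its own critic evaluated on its own observations. This is a minimal sketch assuming a single team reward shared by all agents (as in SMAC) and precomputed critic outputs; `td_advantages` is a hypothetical helper, not code from the IPPO repository.

```python
import numpy as np


def td_advantages(rewards, values, next_values, gamma=0.99):
    """One-step advantage A_t^i = r_t + gamma * V(o_{t+1}^i) - V(o_t^i), per agent.

    rewards:     shape (T,), team reward r_t received by every agent (assumption)
    values:      shape (T, N), V^{theta_i}(o_t^i) from each agent's own critic
    next_values: shape (T, N), V^{theta_i}(o_{t+1}^i) from each agent's own critic
    Returns shape (T, N): one advantage per timestep and agent.
    """
    r = np.asarray(rewards, dtype=float)[:, None]  # (T, 1), broadcast across agents
    v = np.asarray(values, dtype=float)
    v_next = np.asarray(next_values, dtype=float)
    return r + gamma * v_next - v


# Two timesteps, two agents, each with its own value estimates
adv = td_advantages(
    rewards=[1.0, 0.0],
    values=[[0.5, 0.2], [0.4, 0.1]],
    next_values=[[0.4, 0.1], [0.0, 0.0]],
    gamma=0.9,
)
```

To match the formula exactly, the sketch neither zeroes the bootstrap term at episode ends nor uses generalized advantage estimation (GAE); a practical implementation would typically do both.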

The PPO objective balances policy improvement, through the term $r_t^{\theta_i}\hat{A}^i_t$, against update magnitude, through the clipped term $\textrm{clip}(r_t^{\theta_i}, 1-\epsilon, 1+\epsilon)\hat{A}_t^i$. Taking the minimum of the two moves the policy in the direction that improves reward while removing any incentive to push the ratio outside $[1-\epsilon, 1+\epsilon]$, which prevents excessively large update steps. By limiting the distance between the new and old policies in this way, IPPO achieves stable and efficient policy updates. This process repeats until convergence.
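The clipped objective can be made concrete with a few lines of NumPy. This is a sketch of the standard PPO clipped surrogate for one agent, assuming log-probabilities and advantages are already computed; `ppo_clip_loss` is a hypothetical helper name. In actual training the same expression would be written in an autodiff framework so that gradients flow to $\theta_i$.

```python
import numpy as np


def ppo_clip_loss(logp_new, logp_old, advantages, eps=0.2):
    """Clipped PPO loss for a single agent i, averaged over a batch (to be minimized).

    logp_new:   log pi_{theta_i}(a_t^i | o_t^i) under the parameters being optimized
    logp_old:   log-probabilities under the policy that collected the data
    advantages: advantage estimates A_t^i
    eps:        clipping parameter epsilon
    """
    adv = np.asarray(advantages, dtype=float)
    # Probability ratio r_t^{theta_i}, computed from log-probabilities for stability
    ratio = np.exp(np.asarray(logp_new, dtype=float) - np.asarray(logp_old, dtype=float))
    unclipped = ratio * adv
    clipped = np.clip(ratio, 1.0 - eps, 1.0 + eps) * adv
    # Element-wise minimum gives a pessimistic bound; negate it to obtain a loss
    return -np.mean(np.minimum(unclipped, clipped))


# Ratios 1.5 and 0.5 with advantages +1 and -1
loss = ppo_clip_loss(np.log([1.5, 0.5]), np.zeros(2), [1.0, -1.0], eps=0.2)
```

In this example, the first sample's contribution is capped at $1.2$ even though its ratio is $1.5$, and the second sample keeps the more pessimistic value $-0.8$, so the loss is $-0.2$. Pushing either ratio further outside $[0.8, 1.2]$ would not lower the loss.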

While simple, this approach means each agent treats the other agents as part of the environment dynamics. Because those agents keep updating their policies, the dynamics shift during training, and the resulting non-stationarity can harm convergence. So although IPPO successfully extends PPO to multi-agent domains, algorithms like MAPPO are generally expected to perform better because they account for multi-agent effects during training. Still, IPPO is a simple decentralized baseline for MARL experiments.

Multi-Agent PPO (MAPPO)

During training, MAPPO's value function has access to global information about the environment: it is conditioned on the global state $s_t$ rather than on a local observation. Because all agents share this single value function, it can improve training stability compared with independent PPO learners and alleviate non-stationarity:

$\hat{A}_t=r_t + \gamma V^{\phi}(s_{t+1}) - V^{\phi}(s_t)$: estimator of the shared advantage function

$V^{\phi}(s_t) = \mathbb{E}[r_t + \gamma r_{t+1} + \gamma^2 r_{t+2} + \dots|s_t]$: the shared value function with parameters $\phi$

During execution, however, each agent acts using only its own policy conditioned on its local observation, as in IPPO.
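For contrast with IPPO, the shared advantage can be sketched as follows: a single centralized critic yields one advantage per timestep, and every agent receives that same value. This is a minimal sketch assuming a shared team reward and precomputed values $V^{\phi}(s_t)$; `shared_td_advantages` is a hypothetical helper.

```python
import numpy as np


def shared_td_advantages(rewards, state_values, next_state_values, n_agents, gamma=0.99):
    """MAPPO-style one-step advantage from a single centralized critic.

    rewards:           shape (T,), team reward r_t
    state_values:      shape (T,), V^phi(s_t)
    next_state_values: shape (T,), V^phi(s_{t+1})
    Returns shape (T, n_agents); all columns are identical because the
    critic input (the global state) is the same for every agent.
    """
    adv = (np.asarray(rewards, dtype=float)
           + gamma * np.asarray(next_state_values, dtype=float)
           - np.asarray(state_values, dtype=float))
    return np.repeat(adv[:, None], n_agents, axis=1)


adv = shared_td_advantages([1.0, 0.0], [0.5, 0.4], [0.4, 0.0], n_agents=3, gamma=0.9)
```

The critic is only needed to compute these training targets; at execution time each agent samples actions from its own policy alone.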

MAPPO-FP

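MAPPO-FP conditions the value function on the global state concatenated with agent-specific features, $V^{\phi}(\textrm{concat}(s_t, f_t^i))$, where $f^i$ includes the features of agent $i$ or the observation of agent $i$.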
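The agent-specific value input can be sketched as a concatenation. This assumes $f_t^i$ is a fixed-length vector per agent (a one-hot ID in the example); `fp_value_inputs` is a hypothetical helper, not the MAPPO-FP code itself. Because every row differs, even a critic network with shared weights produces a different value for each agent.

```python
import numpy as np


def fp_value_inputs(state, agent_feats):
    """Build one value-function input per agent: concat(s_t, f_t^i).

    state:       shape (S,), global state s_t (identical for all agents)
    agent_feats: shape (N, F), agent-specific features f_t^i
    Returns shape (N, S + F); row i is the value-function input of agent i.
    """
    state = np.asarray(state, dtype=float)
    agent_feats = np.asarray(agent_feats, dtype=float)
    # Repeat the same global state once per agent
    tiled = np.broadcast_to(state, (agent_feats.shape[0], state.shape[0]))
    return np.concatenate([tiled, agent_feats], axis=1)


# Global state with 3 entries, 2 agents identified by one-hot features
x = fp_value_inputs(np.arange(3.0), np.eye(2))
```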

Noisy-MAPPO

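Noisy-MAPPO appends a fixed, agent-specific Gaussian noise vector $x^i$ to the global state, giving each agent a noisy value function $V_i(s) = V^{\phi}(\textrm{concat}(s, x^i))$. The policy gradient is then computed using the noisy advantage values $A^{\pi}_i$ calculated with $V_i(s)$. This noise regularization prevents policies from overfitting to biased gradient estimates, improving stability.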

MAPPO is often regarded as the simplest yet most powerful algorithm because it uses global information to train a centralized value function more efficiently, whereas IPPO uses only local information to train independent value functions.

Environment

We use the StarCraft Multi-Agent Challenge (SMAC) as our benchmark environment.

The key aspects of SMAC are:

- A complex, partially observable Markov game environment with challenges such as sparse rewards, imperfect information, and unit micromanagement.

- Designed specifically to test multi-agent collaboration and coordination.

- Maps of varying difficulty and complexity for evaluating performance, such as the super hard map 3s5z_vs_3s6z and the easy map 3m.

Code-level Analysis

To investigate what actually changes from IPPO to MAPPO, MAPPO-FP, and Noisy-MAPPO, we compared their SMAC implementations at the code level. Fundamentally, the main difference lies in how the value function is modeled during training.

Independent PPO (IPPO)

For the inputs of both the policy function and the value function, IPPO uses the `get_obs_agent` function to obtain the information that each agent can observe. The key line is the `dist < sight_range` check, which filters out everything outside the current agent's field of view and thereby simulates local observation. `agent_id_feats[agent_id] = 1` sets the one-hot agent identifier (ID).
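The same filtering rule can be written in vectorized form, which makes it easy to see which units end up in an agent's local observation. This is a minimal sketch assuming 2-D unit positions and Euclidean distance; `visible_mask` is a hypothetical helper and is not part of SMAC.

```python
import numpy as np


def visible_mask(agent_xy, unit_xy, unit_health, sight_range):
    """Boolean mask of units that would appear in the agent's local observation.

    Mirrors the per-unit `dist < sight_range and e_unit.health > 0` test,
    vectorized over all units.
    """
    offsets = np.asarray(unit_xy, dtype=float) - np.asarray(agent_xy, dtype=float)
    dist = np.hypot(offsets[:, 0], offsets[:, 1])  # Euclidean distance to each unit
    return (dist < sight_range) & (np.asarray(unit_health) > 0)


# Unit 0 is in range and alive, unit 1 is out of range, unit 2 is in range but dead
mask = visible_mask((0.0, 0.0), [(3.0, 4.0), (10.0, 0.0), (1.0, 1.0)], [10, 10, 0], 9.0)
```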

```python
def get_obs_agent(self, agent_id):
    ...
    move_feats = np.zeros(move_feats_dim, dtype=np.float32)
    enemy_feats = np.zeros(enemy_feats_dim, dtype=np.float32)
    ally_feats = np.zeros(ally_feats_dim, dtype=np.float32)
    own_feats = np.zeros(own_feats_dim, dtype=np.float32)
    agent_id_feats = np.zeros(self.n_agents, dtype=np.float32)
    ...
    # Enemy features
    for e_id, e_unit in self.enemies.items():
        e_x = e_unit.pos.x
        e_y = e_unit.pos.y
        dist = self.distance(x, y, e_x, e_y)

        if dist < sight_range and e_unit.health > 0:  # visible and alive
            # Sight range > shoot range
            enemy_feats[e_id, 0] = avail_actions[self.n_actions_no_attack + e_id]  # available
            ...

    # One-hot agent_id
    agent_id_feats[agent_id] = 1.

    agent_obs = np.concatenate((ally_feats.flatten(),
                                enemy_feats.flatten(),
                                move_feats.flatten(),
                                own_feats.flatten(),
                                agent_id_feats.flatten()))
    ...
    return agent_obs
```

Multi-Agent PPO (MAPPO)

For the value-function input, MAPPO drops the `dist < sight_range` check and retains global information about all units. Because every agent then feeds the same state into the value function, MAPPO has only one shared value function. The policy input in MAPPO is the same as in IPPO.

```python
def get_state(self, agent_id=-1):
    ...
    ally_state = np.zeros((self.n_agents, nf_al), dtype=np.float32)
    enemy_state = np.zeros((self.n_enemies, nf_en), dtype=np.float32)
    move_state = np.zeros((1, nf_mv), dtype=np.float32)

    # Enemy features: every living enemy is included (no sight-range check)
    for e_id, e_unit in self.enemies.items():
        if e_unit.health > 0:
            e_x = e_unit.pos.x
            e_y = e_unit.pos.y
            dist = self.distance(x, y, e_x, e_y)
            enemy_state[e_id, 0] = (e_unit.health / e_unit.health_max)  # health
            ...

    state = np.append(ally_state.flatten(), enemy_state.flatten())
    ...
    return state
```

MAPPO-FP

For the input of the value function, MAPPO-FP concatenates own_feats (including agent ID, position, last action and others) of the current agent with global information. The input of the policy function in MAPPO-FP is the same as in IPPO.

```python
def get_state_agent(self, agent_id):
    ...
    move_feats = np.zeros(move_feats_dim, dtype=np.float32)
    enemy_feats = np.zeros(enemy_feats_dim, dtype=np.float32)
    ally_feats = np.zeros(ally_feats_dim, dtype=np.float32)
    own_feats = np.zeros(own_feats_dim, dtype=np.float32)
    agent_id_feats = np.zeros(self.n_agents, dtype=np.float32)
    ...
    # Enemy features
    for e_id, e_unit in self.enemies.items():
        e_x = e_unit.pos.x
        e_y = e_unit.pos.y
        dist = self.distance(x, y, e_x, e_y)

        if e_unit.health > 0:  # alive (no sight-range check, unlike get_obs_agent)
            # Sight range > shoot range
            if unit.health > 0:
                enemy_feats[e_id, 0] = avail_actions[self.n_actions_no_attack + e_id]  # available
            ...

    # Own features
    ind = 0
    own_feats[0] = 1  # visible
    own_feats[1] = 0  # distance
    own_feats[2] = 0  # X
    own_feats[3] = 0  # Y
    ind = 4
    ...
    if self.state_last_action:
        own_feats[ind:] = self.last_action[agent_id]

    state = np.concatenate((ally_feats.flatten(),
                            enemy_feats.flatten(),
                            move_feats.flatten(),
                            own_feats.flatten()))
    return state
```

Noisy-MAPPO

For the value-function input, Noisy-MAPPO concatenates a fixed noise vector with the global information. We found in the code that this noise vector does not need to be changed once it has been properly initialized. The policy input in Noisy-MAPPO is the same as in IPPO.

```python
import torch
import torch.nn as nn


class R_Critic(nn.Module):
    ...

    def forward(self, cent_obs, rnn_states, masks, noise_vector=None):
        ...
        # global states
        cent_obs = check(cent_obs).to(**self.tpdv)
        rnn_states = check(rnn_states).to(**self.tpdv)
        masks = check(masks).to(**self.tpdv)

        if self.args.use_value_noise:
            N = self.args.num_agents
            # fixed noise vector for each agent
            noise_vector = check(noise_vector).to(**self.tpdv)
            noise_vector = noise_vector.repeat(cent_obs.shape[0] // N, 1)
            cent_obs = torch.cat((cent_obs, noise_vector), dim=-1)

        critic_features = self.base(cent_obs)
        ...
        values = self.v_out(critic_features)

        return values, rnn_states
```
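The line `noise_vector.repeat(cent_obs.shape[0] // N, 1)` tiles the per-agent noise so that every row of the critic batch receives one agent's vector. The NumPy sketch below reproduces that step with `np.tile`, which matches `Tensor.repeat(k, 1)` for a 2-D array. It assumes that the rows of `cent_obs` are ordered agent-by-agent within each sample, so row `j` belongs to agent `j % N`; `append_fixed_noise` and `NOISE` are hypothetical names.

```python
import numpy as np


def append_fixed_noise(cent_obs, noise, n_agents):
    """NumPy sketch of the value-noise step in R_Critic.forward.

    cent_obs: shape (B * n_agents, S); rows assumed ordered as
              [agent 0, agent 1, ..., agent n_agents - 1, agent 0, ...]
    noise:    shape (n_agents, D); one fixed noise vector per agent
    """
    reps = cent_obs.shape[0] // n_agents
    tiled = np.tile(noise, (reps, 1))  # row j receives agent (j % n_agents)'s vector
    return np.concatenate([cent_obs, tiled], axis=-1)


# Sample the per-agent noise once and reuse it on every call
rng = np.random.default_rng(seed=0)
NOISE = rng.normal(size=(3, 4))

out = append_fixed_noise(np.zeros((6, 5)), NOISE, n_agents=3)
```

Because `NOISE` never changes, each agent always sees the same appended vector, which is why it behaves like a Gaussian-valued agent ID rather than a source of fresh randomness.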

Based on this code-level analysis, both MAPPO-FP and Noisy-MAPPO can be viewed as instances of IPPO: the fixed noise vector in Noisy-MAPPO acts as a Gaussian-distributed agent_id, and MAPPO-FP is simply IPPO with supplementary global information appended to the value-function input. What they have in common is that each agent has its own value function with access to global information, i.e., IPPO with global information. During training, each agent uses only its own value function to compute its advantages and loss, exactly as in IPPO. We refer to this class of algorithms as Multi-agent IPPO (MAIPPO).

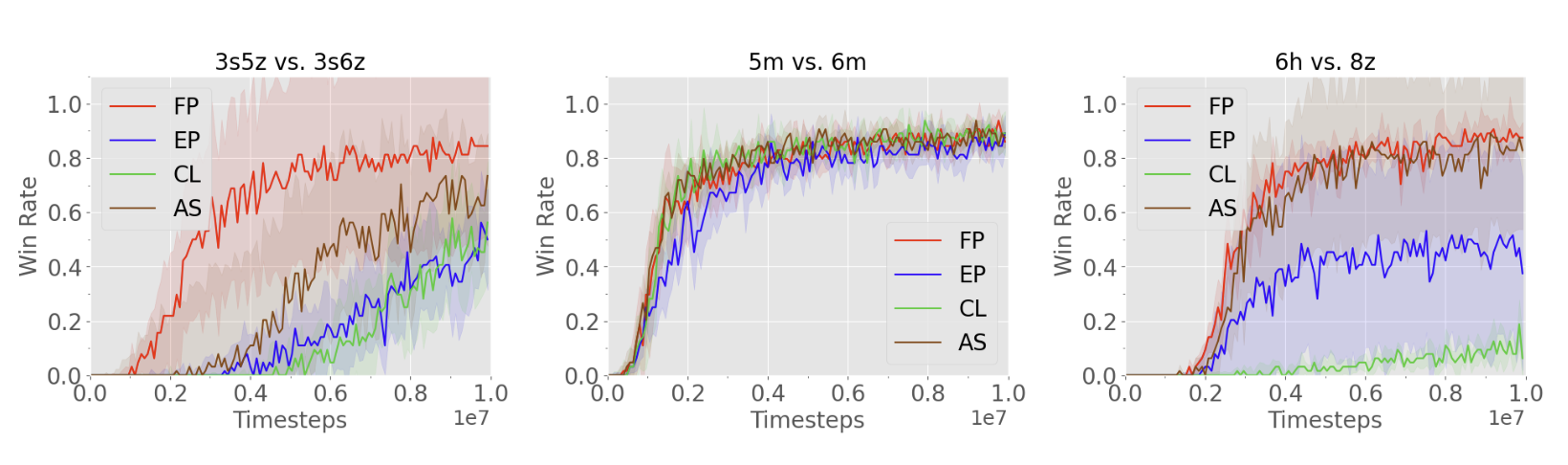

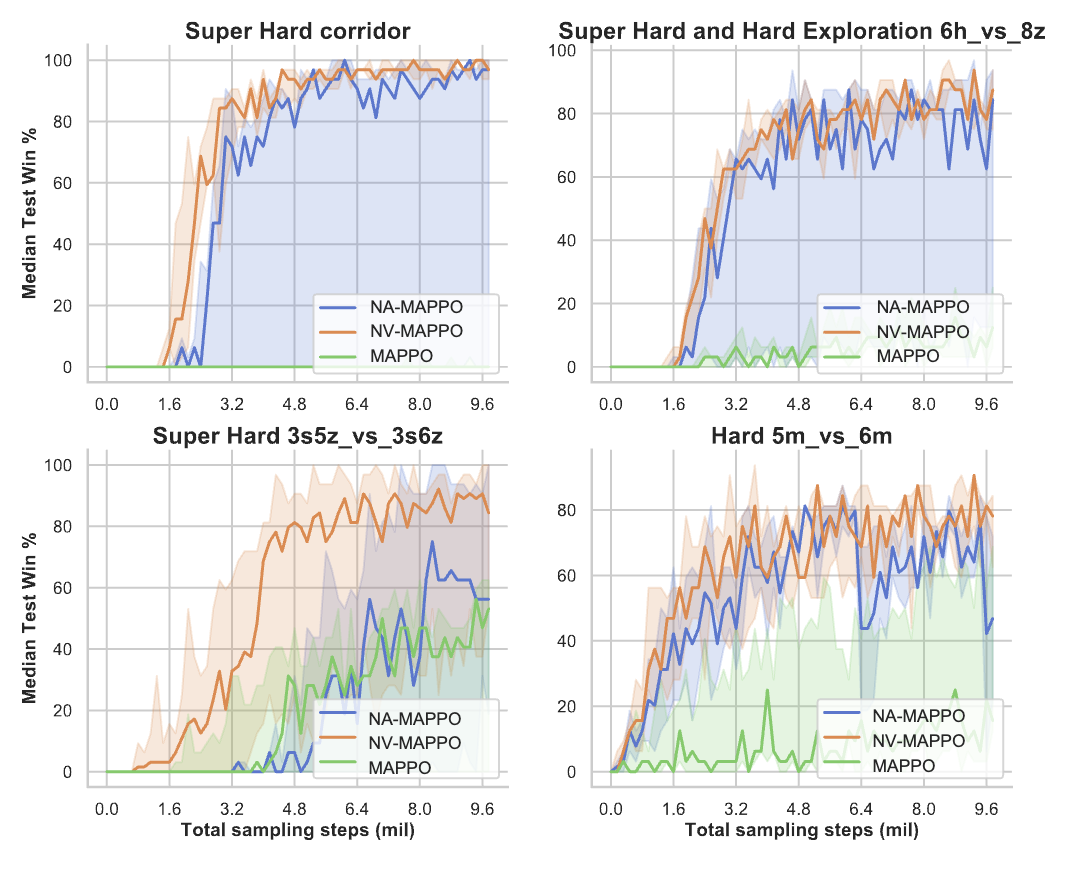

Experiments

We reproduced some of the experimental results of IPPO, MAPPO, MAPPO-FP, and Noisy-MAPPO using their open-source code:

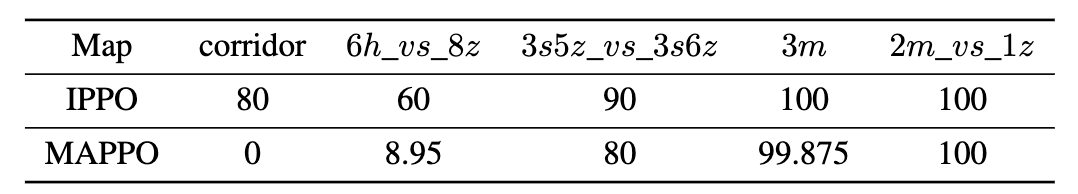

| Algorithm | 3s5z_vs_3s6z | 5m_vs_6m | corridor | 27m_vs_30m | MMM2 |
|---|---|---|---|---|---|
| MAPPO | 53% | 26% | 3% | 95% | 93% |
| IPPO | 84% | 88% | 98% | 75% | 87% |
| MAPPO-FP | 85% | 89% | 100% | 94% | 90% |
| Noisy-MAPPO | 87% | 89% | 100% | 100% | 96% |
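As a rough summary, each row of the table can be condensed into an unweighted mean win rate over the five maps. The numbers below are copied from the table; the average ignores differences in map difficulty, so it is only a quick way to compare the rows at a glance.

```python
# Win rates (%) copied from the reproduction table, in map order:
# 3s5z_vs_3s6z, 5m_vs_6m, corridor, 27m_vs_30m, MMM2
win_rates = {
    "MAPPO": [53, 26, 3, 95, 93],
    "IPPO": [84, 88, 98, 75, 87],
    "MAPPO-FP": [85, 89, 100, 94, 90],
    "Noisy-MAPPO": [87, 89, 100, 100, 96],
}

# Unweighted mean over the five maps
mean_win_rate = {name: sum(rates) / len(rates) for name, rates in win_rates.items()}

for name, mean in mean_win_rate.items():
    print(f"{name:12s} {mean:.1f}%")
```

This gives 54.0% for MAPPO, 86.4% for IPPO, 91.6% for MAPPO-FP, and 94.4% for Noisy-MAPPO.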

We also cite below the experimental results reported in the original papers.

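From these experimental results, we observe that:

- The centralized value function of MAPPO does not consistently improve performance. MAPPO beats IPPO on 27m_vs_30m and MMM2, but falls far behind it on 3s5z_vs_3s6z (53% vs. 84%), 5m_vs_6m (26% vs. 88%), and corridor (3% vs. 98%). Independent value functions for each agent make multi-agent learning more robust.

- Introducing global information into the value function improves the learning efficiency of IPPO: both MAPPO-FP and Noisy-MAPPO outperform IPPO on all five maps.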

Discussion

In this blog post, we took a closer look at the relationship between MAPPO and IPPO from the perspective of code and experiments. Our conclusion is that IPPO with global information (Multi-agent IPPO) is all you need. Under the principle of centralized training with decentralized execution (CTDE), MAPPO's centralized value function should be easier to learn than IPPO's and unbiased. Why, then, does IPPO outperform paradigms like MAPPO in practice?

We further examined the two implementations of IPPO with global information, MAPPO-FP and Noisy-MAPPO. MAPPO-FP uses an agent's own features, including its agent ID, position, last action, and others, to form independent value functions. Noisy-MAPPO, in contrast, uses only a Gaussian-noise vector that plays the role of an agent ID. Essentially, both aim to give each agent a distinct value function.

Therefore, there are several reasons for employing independent value functions over a centralized value function:

- The independent value functions increase policy diversity and improve exploration capabilities.

- The independent value functions constitute a form of ensemble learning, making the PPO algorithm more robust in unstable multi-agent environments.

- Each agent having its own value function can be seen as an implicit form of credit assignment.

With this blog post, we aim to raise awareness of the MAIPPO class of algorithms beyond the well-known MAPPO method. We believe the full MAIPPO family holds untapped potential that deserves further exploration.