Multi-modal Learning: A Look Back and the Road Ahead

Advancements in language models have spurred an increasing interest in multi-modal AI: models that process and understand information across multiple forms of data, such as text, images, and audio. While the goal is to emulate the human ability to handle diverse information, a key question is: do human-defined modalities align with machine perception? If not, how does this misalignment affect AI performance? In this blog, we examine these questions by reflecting on the progress made by the community in developing multi-modal benchmarks and architectures, highlighting their limitations. By reevaluating our definitions and assumptions, we propose ways to better handle multi-modal data by building models that analyze and combine modality contributions both independently and jointly with other modalities.

Introduction

Humans constantly use multiple senses to interact with the world around us. We use vision to see, olfaction to smell, audition to hear, and we communicate through speech. Similarly, with recent multi-modal artificial intelligence (AI) advancements, we now see articles announcing “ChatGPT can see, hear and speak”. But there’s a fundamental question underlying this progress:

To unpack this question, we show examples that illustrate the basic ambiguity of defining modalities for machine learning.

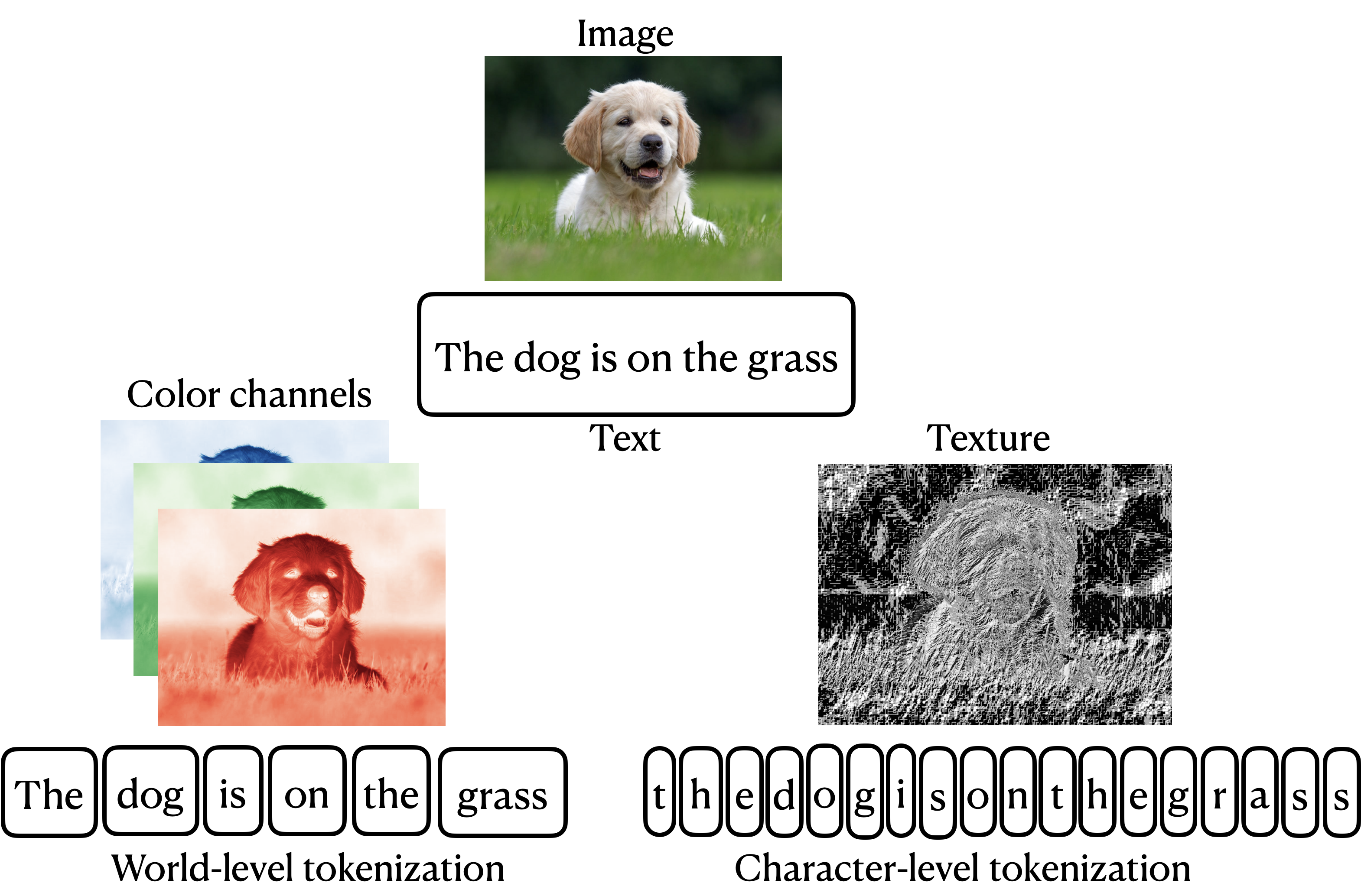

Example 1: Images

Images are commonly considered a single modality for both humans and AI. For humans, an image is a unified representation of visual information. However, machine learning models can perceive images in various ways:

- Color channels: RGB images comprise Red, Green, and Blue (R, G, B) channels, each of which could be viewed as a separate component or modality.

- Derived representations: We can generate secondary images highlighting specific features, such as material properties like shininess or texture.

- Image patches: The image can be divided into smaller sections or patches of different sizes, capturing different parts of the image.
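To make two of these decompositions concrete, here is a minimal sketch in plain Python. The 4×4 toy image, the helper names `split_channels` and `split_patches`, and the patch size of 2 are illustrative assumptions rather than part of any particular library.

```python
# Toy 4x4 RGB image stored as rows of (R, G, B) tuples (made-up values).
image = [[(r * 4 + c, 100 + r, 200 + c) for c in range(4)] for r in range(4)]


def split_channels(img):
    """Return three 2-D grids, one per color channel."""
    return [[[pixel[k] for pixel in row] for row in img] for k in range(3)]


def split_patches(img, size):
    """Cut the image into non-overlapping size x size patches (row-major order)."""
    height, width = len(img), len(img[0])
    patches = []
    for top in range(0, height, size):
        for left in range(0, width, size):
            patch = [row[left:left + size] for row in img[top:top + size]]
            patches.append(patch)
    return patches


red, green, blue = split_channels(image)
patches = split_patches(image, size=2)

print(len(red), len(red[0]))   # each channel keeps the 4x4 layout
print(len(patches))            # four 2x2 patches
```

The same pixels can thus be handed to a model as one image, three channel grids, or four patches, and nothing in the data itself says which grouping counts as a "modality".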

These decompositions raise an important question:

Example 2: Text

Text is commonly treated as a single modality in language models. However, the method of tokenization (how text is broken into atomic units) can vary widely:

- Word-Level Tokenization: Sentences are split into individual words. For example, the sentence “The dog is on the grass” would be tokenized as (the, dog, is, on, the, grass).

- Character-Level Tokenization: Each individual character is treated as a token, turning the same sentence into (t, h, e, d, o, g, i, s, o, n, t, h, e, g, r, a, s, s).
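The two schemes can be sketched in a few lines of plain Python. Lowercasing the sentence and dropping whitespace at the character level are simplifying assumptions made here for illustration; real tokenizers differ in both respects.

```python
sentence = "The dog is on the grass"

# Word-level: split on whitespace after lowercasing.
word_tokens = sentence.lower().split()

# Character-level: every non-space character becomes its own token.
char_tokens = [ch for ch in sentence.lower() if not ch.isspace()]

print(word_tokens)        # 6 tokens
print(len(char_tokens))   # 18 tokens
```

The same sentence yields 6 units under one scheme and 18 under the other, so a model sees quite different inputs even though a human would call both "text".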

This leads us to ask:

Although the term “multi-view” is sometimes used to describe these variations, the line between “multi-view” and “multi-modal” is often blurred, adding to the confusion. This is an important question because different tokenization strategies often perform differently on different tasks.

Example 3: Medical data

Consider diagnosing a skin lesion using both image data and tabular features, such as the patient’s age, demographic information, and characteristics of the lesion, along with its anatomical region. Each modality contributes to detecting the lesion both independently and jointly.

Why is modality grouping information important?

The definition and grouping of modalities matter because they affect how we design models to process and integrate different types of data. Often the objective of prior studies is to ensure that models capture interactions between all modalities for the downstream task. This goal has led to two parallel lines of work. One approach focuses on developing new benchmarks to capture this interaction. These benchmarks often exhibit uni-modal biases, leading to subsequent iterations or new benchmarks intended to better evaluate multi-modal capabilities. The other approach emphasizes building complex architectures designed to learn interactions between modalities more effectively.

In this blog post, we examine the community’s progress in both of these directions and why they have fallen short of meaningful impact. We then propose ways to analyze and capture the relative importance of individual modalities and their interaction for the downstream task.

Reflection on the Progress so Far

Over the past decade, numerous multi-modal benchmarks and model architectures have been proposed to evaluate and enhance the multi-modal learning capabilities of AI models. Yet, we continue to struggle with making meaningful progress due to benchmarks not accurately representing real-world scenarios and models failing to capture the essential multi-modal dependencies effectively. We reflect on the progress made in these two areas and discuss why these approaches have not been sufficient in obtaining the desired results.

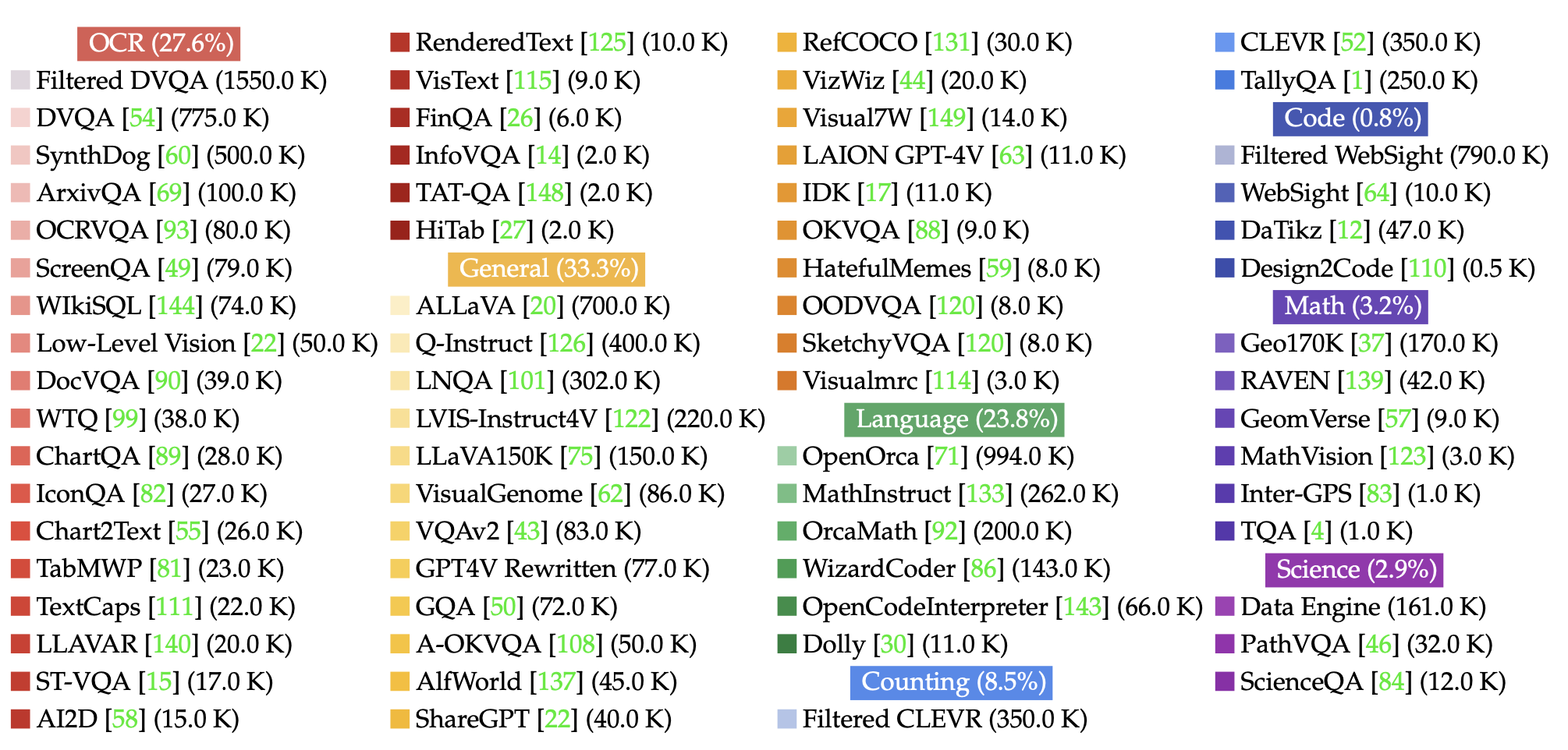

Are benchmarks enough?

Numerous benchmarks have been developed for multi-modal learning (see the figure above). Many were initially designed to evaluate whether models could effectively capture interactions between modalities for downstream tasks. The early benchmarks often exhibited a reliance on uni-modal biases, allowing models to perform well even when ignoring certain modalities.

For instance, consider visual question answering (VQA):

- VQAv2 (2016): Attempted to mitigate language bias by providing distinct answers for two different image-question pairs. Despite the subsequent updates, VQAv2 continues to be a benchmark in research and evaluations for recent models like Gemini, ChatGPT, and LLaVA.

- VQA-CP (2018): Adjusted answer distributions between training and test sets to reduce prior biases.

- VQA-CE (2021): Emphasized multi-modal spurious dependencies among image, text, and answer to better capture multi-modal interactions.

- VQA-VS (2022): Broadened the scope to explore both uni-modal and multi-modal shortcuts in a more comprehensive manner.
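A simple way to see what a uni-modal bias looks like is a "blind" baseline that never looks at the image. The sketch below uses a made-up toy dataset; the questions, image identifiers, answers, and the helper names `fit_question_only` and `accuracy` are all hypothetical and only illustrate the idea.

```python
from collections import Counter, defaultdict

# Hypothetical toy VQA-style data: (question, image_id, answer).
train = [
    ("what color is the banana", "img1", "yellow"),
    ("what color is the banana", "img2", "yellow"),
    ("what color is the banana", "img3", "green"),
    ("is there a dog", "img4", "yes"),
    ("is there a dog", "img5", "yes"),
    ("is there a dog", "img6", "no"),
]
test = [
    ("what color is the banana", "img7", "yellow"),
    ("what color is the banana", "img8", "green"),
    ("is there a dog", "img9", "yes"),
    ("is there a dog", "img10", "yes"),
]


def fit_question_only(examples):
    """Map each question to its most frequent training answer; images are ignored."""
    counts = defaultdict(Counter)
    for question, _image_id, answer in examples:
        counts[question][answer] += 1
    return {q: c.most_common(1)[0][0] for q, c in counts.items()}


def accuracy(predictor, examples):
    """Fraction of examples whose answer matches the question-only prediction."""
    correct = sum(predictor.get(q) == a for q, _image_id, a in examples)
    return correct / len(examples)


blind = fit_question_only(train)
print(accuracy(blind, test))  # 0.75
```

On this toy data the blind baseline is right three times out of four without using the image at all, which is the kind of shortcut successive VQA benchmarks have tried to close.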

Even after a decade of refinements, VQA benchmarks continue to grapple with biases and limitations, raising concerns about the direction of constructing more and more benchmarks. While many benchmarks aim to evaluate different capabilities of models, they often result in only incremental improvements. Similar challenges are evident in non-VQA benchmarks such as Natural Language Visual Reasoning (NLVR).

These examples highlight a critical limitation:

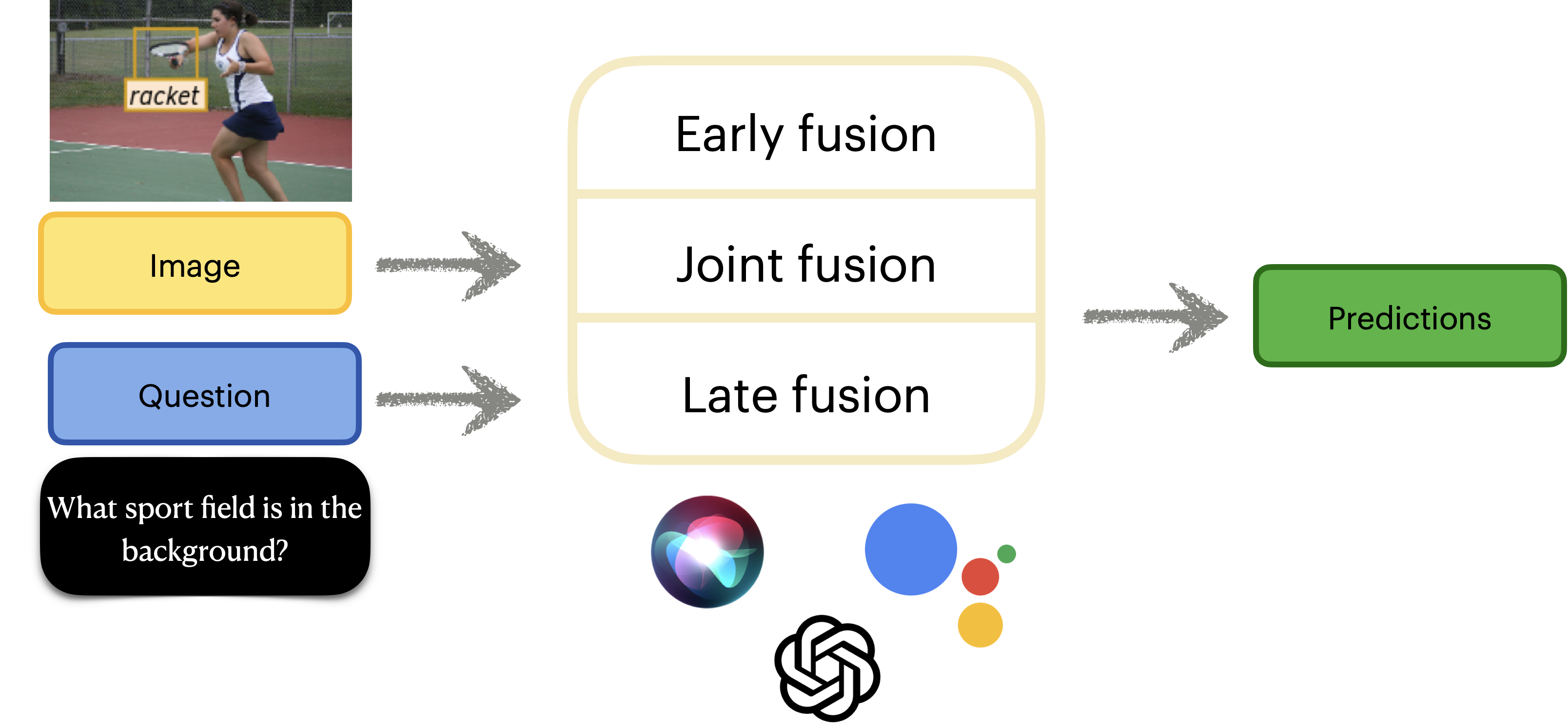

Are complex multi-modal architectures enough?

In the task of VQA, as shown in the figure above, many methods process the image and text modalities so that they have the same dimensions and then apply one of the conventional “multi-modal” algorithms:

- Early fusion: These methods concatenate modality features and then process them jointly, using a unified encoder with shared parameters across all modalities. This approach is common in methods ranging from early multi-modal learning to recent transformer-based models.

- Intermediate fusion: These methods fuse specific layers, rather than sharing all the parameters.

- Late fusion: These methods learn separate encoder representations for each modality followed by fusion. The fusion often uses additional layers to capture the interaction between these modalities on top of the individual representations.
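The difference between early and late fusion can be sketched with tiny linear maps standing in for neural encoders. Everything here (the `linear` helper, the feature values, and the weight matrices) is a made-up assumption chosen to keep the arithmetic easy to follow; intermediate fusion is not shown.

```python
def linear(weights, features):
    """Apply a weight matrix (list of rows) to a feature vector."""
    return [sum(w * f for w, f in zip(row, features)) for row in weights]


def early_fusion(image_feat, text_feat, joint_weights):
    """Concatenate the modality features, then process them jointly."""
    return linear(joint_weights, image_feat + text_feat)


def late_fusion(image_feat, text_feat, image_weights, text_weights, fusion_weights):
    """Encode each modality separately, then fuse the two representations."""
    image_repr = linear(image_weights, image_feat)
    text_repr = linear(text_weights, text_feat)
    return linear(fusion_weights, image_repr + text_repr)


# Made-up features and weights.
image_feat = [1.0, 2.0]
text_feat = [3.0]

joint_weights = [[1.0, 0.0, 1.0], [0.0, 1.0, -1.0]]   # 2 outputs from 3 inputs
image_weights = [[1.0, 1.0]]                          # 1-d image representation
text_weights = [[2.0]]                                # 1-d text representation
fusion_weights = [[1.0, 1.0], [1.0, -1.0]]            # 2 outputs from 2 inputs

print(early_fusion(image_feat, text_feat, joint_weights))   # [4.0, -1.0]
print(late_fusion(image_feat, text_feat, image_weights, text_weights, fusion_weights))  # [9.0, -3.0]
```

In the early variant a single set of weights sees the raw concatenation, while in the late variant the fusion layer only sees what each modality-specific encoder chose to keep.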

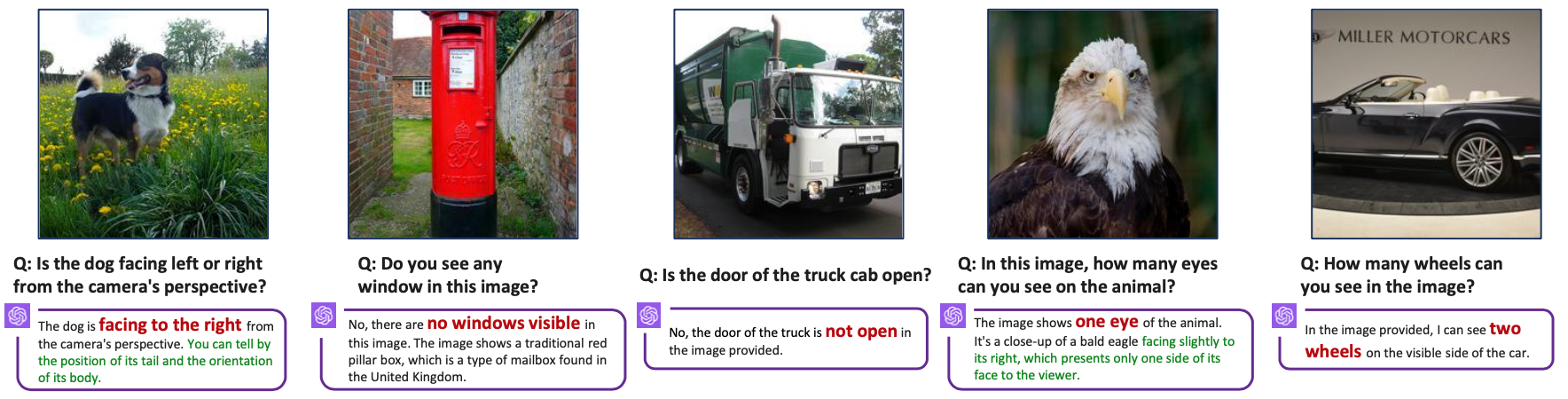

Despite a decade of advancements in developing these complex architectures, many multi-modal models continue to struggle with effectively integrating vision and text, often disregarding one modality in the process (see the figure above). For example, while humans consistently achieve around 95% accuracy on VQA, recent AI models such as GPT-4V and Gemini only reach about 40%, with others like LLaVA-1.5, Bing Chat, mini-GPT4, and Bard performing even worse, sometimes falling below random performance levels.

Some studies

Towards Meaningful Progress in Multi-modal Learning

To drive meaningful progress in multi-modal learning, we need to move away from simply creating more benchmarks or building increasingly complex architectures. While these efforts have advanced the field incrementally, they haven’t tackled its fundamental challenges. Instead, we propose approaching the field from a two-stage perspective:

Analysis of the strengths of individual modalities and their combinations

For any dataset or benchmark, we recommend starting with an explicit assumption of what constitutes a modality in the context of the desired task. This definition should not be limited to human-perceived notions of modality but should critically evaluate and challenge these assumptions. The goal, however, is not merely to label an input as multi-modal; rather, it is to assess whether such labeling provides meaningful advantages for model performance or understanding.

To answer that question, we recommend a thorough examination of the dependencies for each defined modality, both individually and jointly with other modalities, for the target task. These dependencies fall into two categories: intra-modality dependencies, which represent interactions between an individual modality and the target label, and inter-modality dependencies, which capture interactions among the modalities and the label.
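As a rough illustration of such an examination, the sketch below compares how well the label can be predicted from each modality alone versus from both together on a toy dataset. The helper names `best_lookup_accuracy` and `dependency_report`, the binary "modalities", and the use of in-sample majority-vote accuracy as the measure are all assumptions made for this example, not a procedure prescribed by prior studies.

```python
from collections import Counter, defaultdict


def best_lookup_accuracy(inputs, labels):
    """Accuracy of predicting the majority label for each distinct input value."""
    by_input = defaultdict(Counter)
    for value, label in zip(inputs, labels):
        by_input[value][label] += 1
    correct = sum(max(counter.values()) for counter in by_input.values())
    return correct / len(labels)


def dependency_report(x1, x2, y):
    """Compare each modality alone against both modalities taken jointly."""
    return {
        "x1_only": best_lookup_accuracy(x1, y),
        "x2_only": best_lookup_accuracy(x2, y),
        "joint": best_lookup_accuracy(list(zip(x1, x2)), y),
    }


x1 = [0, 0, 1, 1]
x2 = [0, 1, 0, 1]

# Label depends only on the interaction (XOR): neither modality helps alone.
xor_labels = [a ^ b for a, b in zip(x1, x2)]
print(dependency_report(x1, x2, xor_labels))
# {'x1_only': 0.5, 'x2_only': 0.5, 'joint': 1.0}

# Label copies modality 1: the intra-modality dependency is enough.
copy_labels = list(x1)
print(dependency_report(x1, x2, copy_labels))
# {'x1_only': 1.0, 'x2_only': 0.5, 'joint': 1.0}
```

The first case is dominated by an inter-modality dependency and the second by an intra-modality one, even though both would usually be described as "two-modality" tasks.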

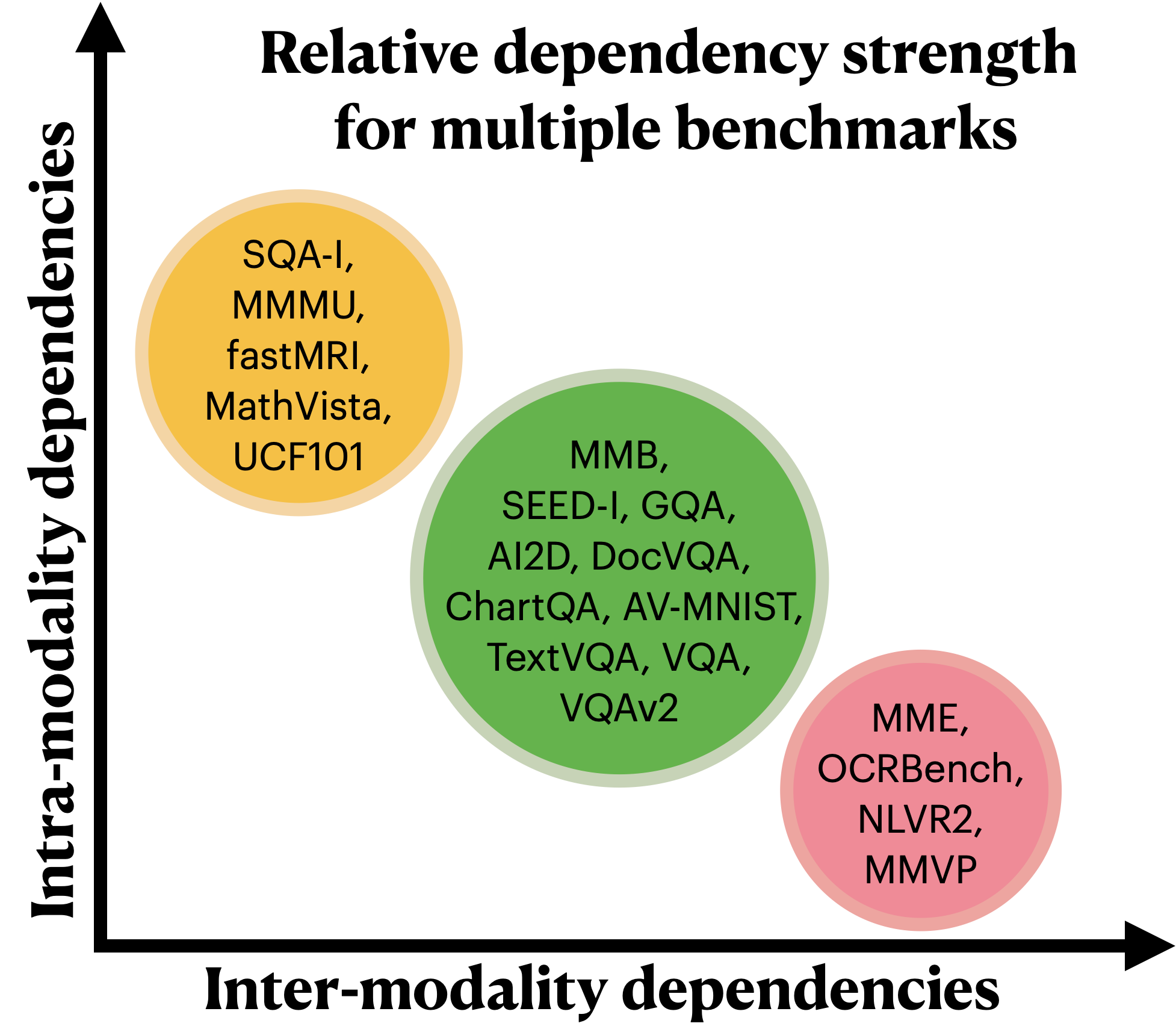

Several studies have evaluated benchmarks involving images and text as two modalities, and we illustrate how these dependencies differed across benchmarks based on prior studies.

For all these benchmarks, specific architectural choices, such as the type of fusion method or the backbone architecture for vision and language models, exhibit minimal impact on performance, as noted in prior studies.

This analysis provides a better understanding of the importance of each modality and their interaction in the corresponding dataset. Such understanding enables us to prioritize and weight these contributions when constructing multi-modal models, as elaborated in the next section.

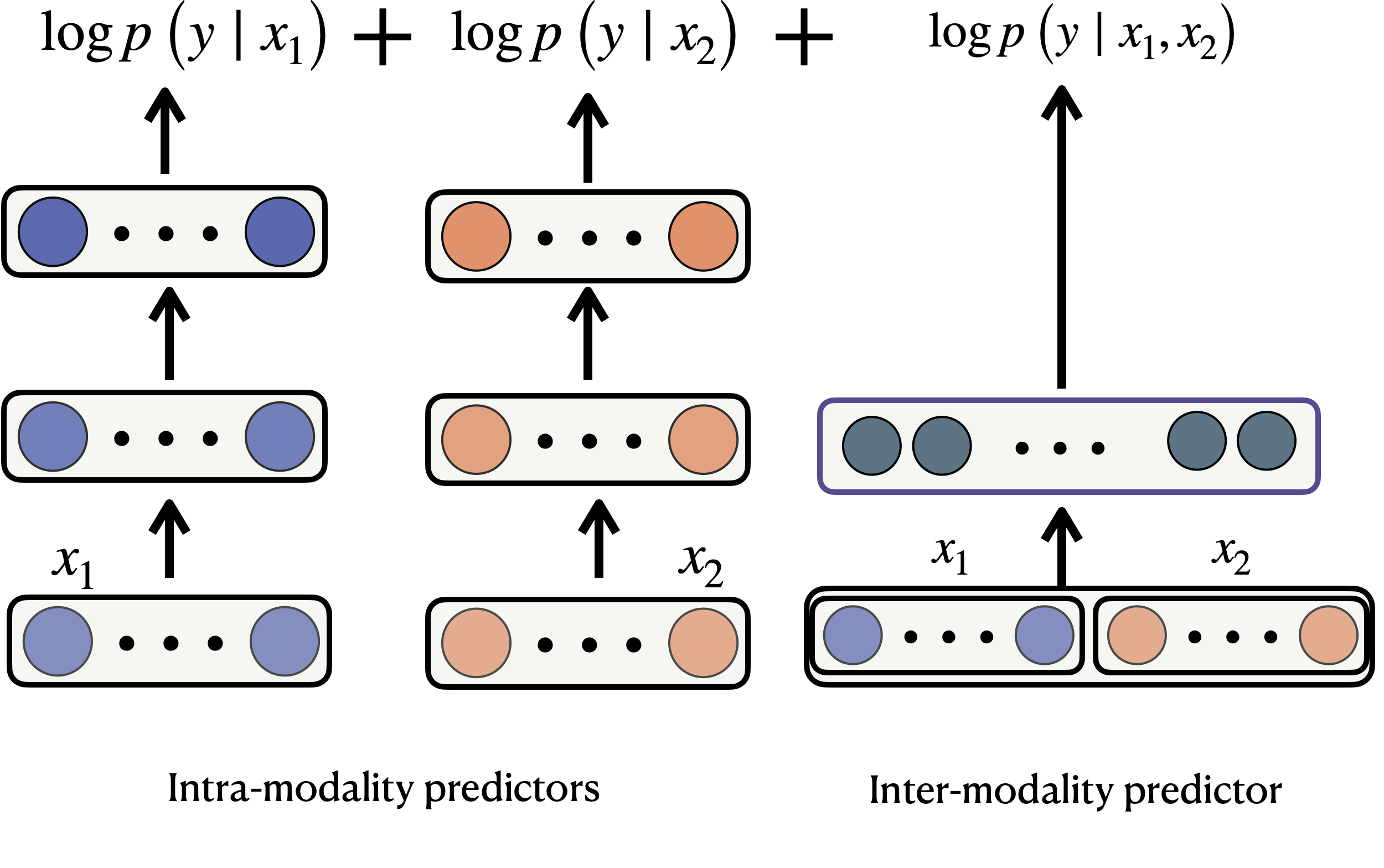

Building better multi-modal models

With a clear understanding of each modality’s contribution, we can prioritize the dependencies that are the most important, or simply build a “product of experts” approach to combine these dependencies (see the figure above). In particular, for two modalities \(\{x_1, x_2\}\) and label \(y\), the output can be expressed as the product of two sets of models: one capturing the importance of individual modalities for the label (intra-modality predictors) and the other focusing on the importance of their interactions for the label (inter-modality predictor) as follows:


```python
import torch
from torch import nn


class ProductOfExperts(nn.Module):
    # Illustrative wrapper: the class name and constructor are assumptions,
    # added so that the `self.*` sub-models used in `forward` are defined.
    def __init__(self, intra_model_1, intra_model_2, inter_model):
        super().__init__()
        self.intra_model_1 = intra_model_1
        self.intra_model_2 = intra_model_2
        self.inter_model = inter_model

    def forward(self, x_1, x_2):
        """
        Forward pass for multi-modal classification using Product of Experts (PoE).
        Combines predictions from intra-modality models and inter-modality model.

        Args:
            x_1 (Tensor): Input from modality 1
            x_2 (Tensor): Input from modality 2

        Returns:
            Tensor: Log-probabilities over output classes, shape [batch_size, num_classes]
        """
        # Intra-modality predictors (separate models for each modality)
        intra_output_1 = self.intra_model_1(x_1)
        intra_output_2 = self.intra_model_2(x_2)

        # Inter-modality predictor (fusion model over both modalities);
        # concatenation assumes x_1 and x_2 share all but the last dimension
        inter_input = torch.cat([x_1, x_2], dim=-1)
        inter_output = self.inter_model(inter_input)

        # Convert outputs to log-probabilities
        log_probs_intra_1 = torch.log_softmax(intra_output_1, dim=-1)
        log_probs_intra_2 = torch.log_softmax(intra_output_2, dim=-1)
        log_probs_inter = torch.log_softmax(inter_output, dim=-1)

        # Product of Experts: add log-probabilities from each expert
        combined_log_probs = log_probs_intra_1 + log_probs_intra_2 + log_probs_inter

        # Normalize so the class probabilities sum to one
        log_normalizer = torch.logsumexp(combined_log_probs, dim=-1, keepdim=True)
        log_probs = combined_log_probs - log_normalizer
        return log_probs
```

The code above combines the output log probabilities in an additive way and has been shown to work effectively across various healthcare, language, and vision benchmarks, even when the relative strength of these dependencies is not known.
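Adding log-probabilities and renormalizing is the same as multiplying the experts' probabilities, i.e. \(p(y \mid x_1, x_2) \propto p_1(y \mid x_1)\, p_2(y \mid x_2)\, p_{12}(y \mid x_1, x_2)\), where the subscripted names are our shorthand for the three predictors. The small worked example below checks this numerically in plain Python; the expert probabilities and the helper names `log_softmax` and `product_of_experts` are made up for illustration.

```python
import math


def log_softmax(logits):
    """Numerically stable log-softmax over a list of logits."""
    peak = max(logits)
    log_norm = peak + math.log(sum(math.exp(v - peak) for v in logits))
    return [v - log_norm for v in logits]


def product_of_experts(*expert_logits):
    """Add the experts' log-probabilities per class, then renormalize."""
    per_expert = [log_softmax(logits) for logits in expert_logits]
    combined = [sum(values) for values in zip(*per_expert)]
    return log_softmax(combined)


# Made-up expert outputs for a two-class problem.
intra_1 = [math.log(0.5), math.log(0.5)]   # modality 1 alone is uninformative
intra_2 = [math.log(0.6), math.log(0.4)]   # modality 2 alone leans to class 0
inter = [math.log(0.2), math.log(0.8)]     # the joint expert favors class 1

probs = [math.exp(v) for v in product_of_experts(intra_1, intra_2, inter)]
print([round(p, 3) for p in probs])  # [0.273, 0.727]
```

The uninformative expert leaves the result unchanged, while the two informative experts are weighed against each other: 0.6 × 0.2 versus 0.4 × 0.8, renormalized to roughly 0.27 and 0.73.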

This comes with the trade-off of increased parameter requirements, which could impact efficiency. We believe future research should focus on optimizing this framework to reduce its computational cost. Progress in this direction is important, as current trends often attempt to address these challenges by either expanding datasets or increasing architectural complexity as highlighted above—approaches that have not led us to efficient or scalable solutions.

Takeaway

Current approaches to multimodal learning tend to overemphasize the interaction between modalities for downstream tasks, resulting in benchmarks and architectures narrowly focused on modeling these interactions. In real-world scenarios, however, the strength of these interactions is often unknown. To build more effective multimodal models, we need to shift our focus toward holistically understanding the independent contributions of each modality as well as their joint impact on the target task.

Acknowledgement

This work was supported by the Institute of Information & Communications Technology Planning & Evaluation (IITP) with a grant funded by the Ministry of Science and ICT (MSIT) of the Republic of Korea in connection with the Global AI Frontier Lab International Collaborative Research, Samsung Advanced Institute of Technology (under the project Next Generation Deep Learning: From Pattern Recognition to AI), National Science Foundation (NSF) award No. 1922658, Center for Advanced Imaging Innovation and Research (CAI2R), National Center for Biomedical Imaging and Bioengineering operated by NYU Langone Health, and National Institute of Biomedical Imaging and Bioengineering through award number P41EB017183.