Demystifying Vectorfield Modeling in Generative Models

Among the most popular techniques for generative modeling in recent years are methods that model vectorfields of data transformations over multiple steps. One of the most recent and widely adopted methods of this kind is flow matching. This blog post focuses on the concept of vectorfields, vectorfield modeling via neural networks, and its use case in the flow matching framework.

Introduction

In recent years, generative modeling has come to rely on a new class of models that, given a set of data samples, learn to map a noise distribution to a target distribution (such as images) through a multi-step denoising process. Diffusion models are among the best-known methods of this kind.

What is a Vectorfield?

A vector field is a mathematical construct that assigns a vector to every point in a space. It is often used to represent the distribution of a vector quantity, such as velocity, force, or magnetic field, across a region.

Now let’s take a closer look at some of these examples.

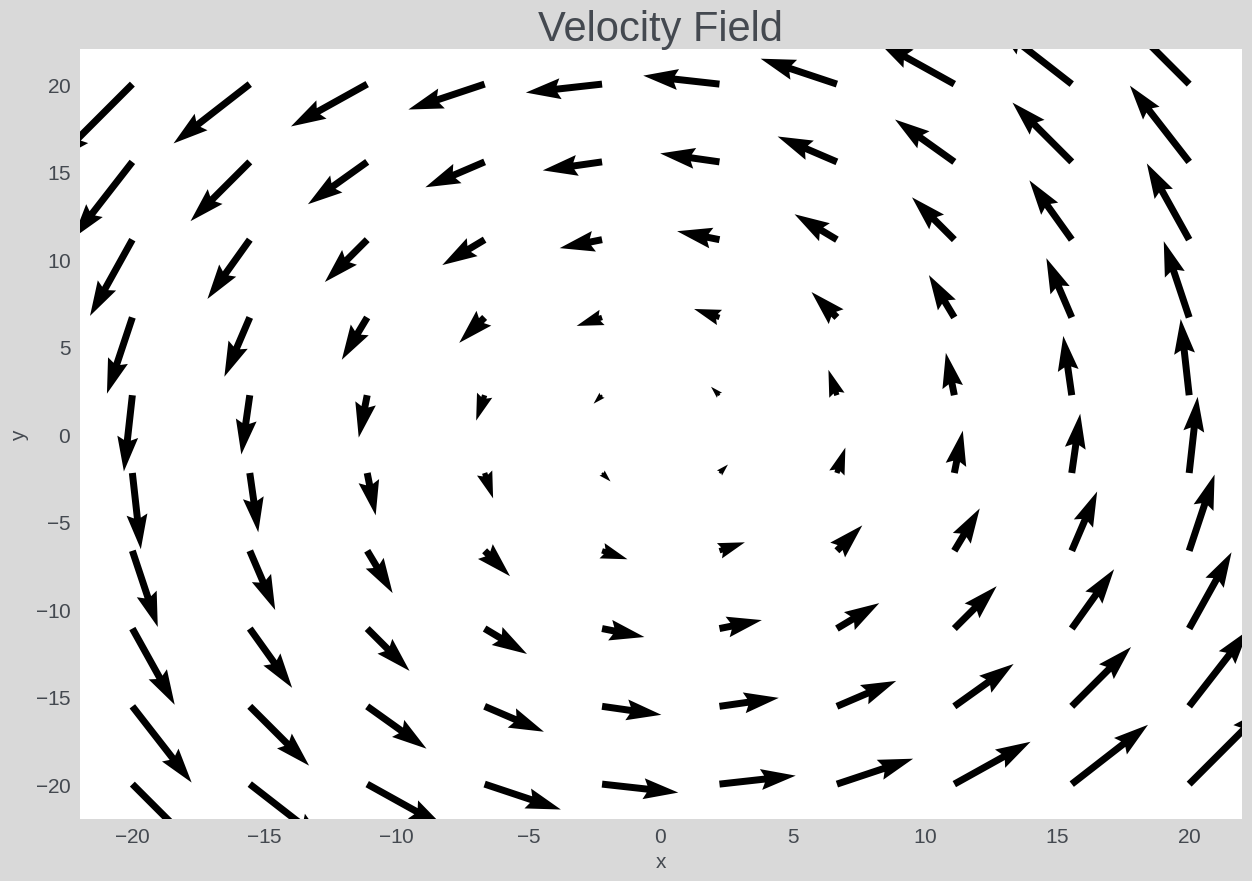

Velocity Field

The following is an example of a velocity field, representing a rotational flow, often called a vortex:

\[\mathbf{v}(x, y) = \langle -y, x \rangle\]

The x-component of the velocity is \(-y\) and the y-component is \(x\). This configuration causes the flow to rotate counterclockwise around the origin.
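To make the rotation concrete, here is a minimal sketch that evaluates this field at a few points. The function name `velocity` is illustrative and not from the original post. At every point the velocity is perpendicular to the position vector (zero dot product), and the z-component of the cross product of position and velocity is positive, which is what counterclockwise rotation means.

```python
def velocity(x, y):
    """Vortex velocity field v(x, y) = <-y, x>."""
    return (-y, x)


for point in [(1.0, 0.0), (0.0, 1.0), (-1.0, 0.0), (0.0, -1.0)]:
    vx, vy = velocity(*point)
    # Zero dot product with the position: the flow is purely tangential.
    dot = point[0] * vx + point[1] * vy
    # Positive z-component of (position x velocity): counterclockwise rotation.
    cross = point[0] * vy - point[1] * vx
    print(point, "->", (vx, vy), "dot:", dot, "cross:", cross)
```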

We can visualize this vectorfield as follows:

Gravitational Field

Another example of a vectorfield is the gravitational field created by a point mass. It can be formulated as:

\[\mathbf{g}(x, y) = \left\langle -\frac{G \cdot m \cdot x}{r^3}, -\frac{G \cdot m \cdot y}{r^3} \right\rangle\]

where \(G\) is the gravitational constant, \(m\) is the mass, and \(r=\sqrt{x^2+y^2}\) is the distance from the mass. The field points towards the mass, with magnitude \(G m / r^2\), which is inversely proportional to the square of the distance.
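The inverse-square behaviour can be checked numerically. This is a minimal sketch that assumes the standard point-mass form \(\mathbf{g} = -G m \langle x, y \rangle / r^3\) and sets \(G = m = 1\) for convenience. The names `gravity` and `magnitude` are illustrative.

```python
import math


def gravity(x, y, G=1.0, m=1.0):
    """Field of a point mass at the origin: -G*m*<x, y> / r^3."""
    r = math.hypot(x, y)
    if r == 0.0:
        raise ValueError("the field is undefined at the location of the mass")
    scale = -G * m / r**3
    return (scale * x, scale * y)


def magnitude(v):
    return math.hypot(v[0], v[1])


near = magnitude(gravity(1.0, 0.0))  # distance 1 from the mass
far = magnitude(gravity(2.0, 0.0))   # distance 2 from the mass
print("ratio of magnitudes:", near / far)
```

Doubling the distance reduces the magnitude by a factor of four, and both components always have the opposite sign to the position, so the field points back towards the mass.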

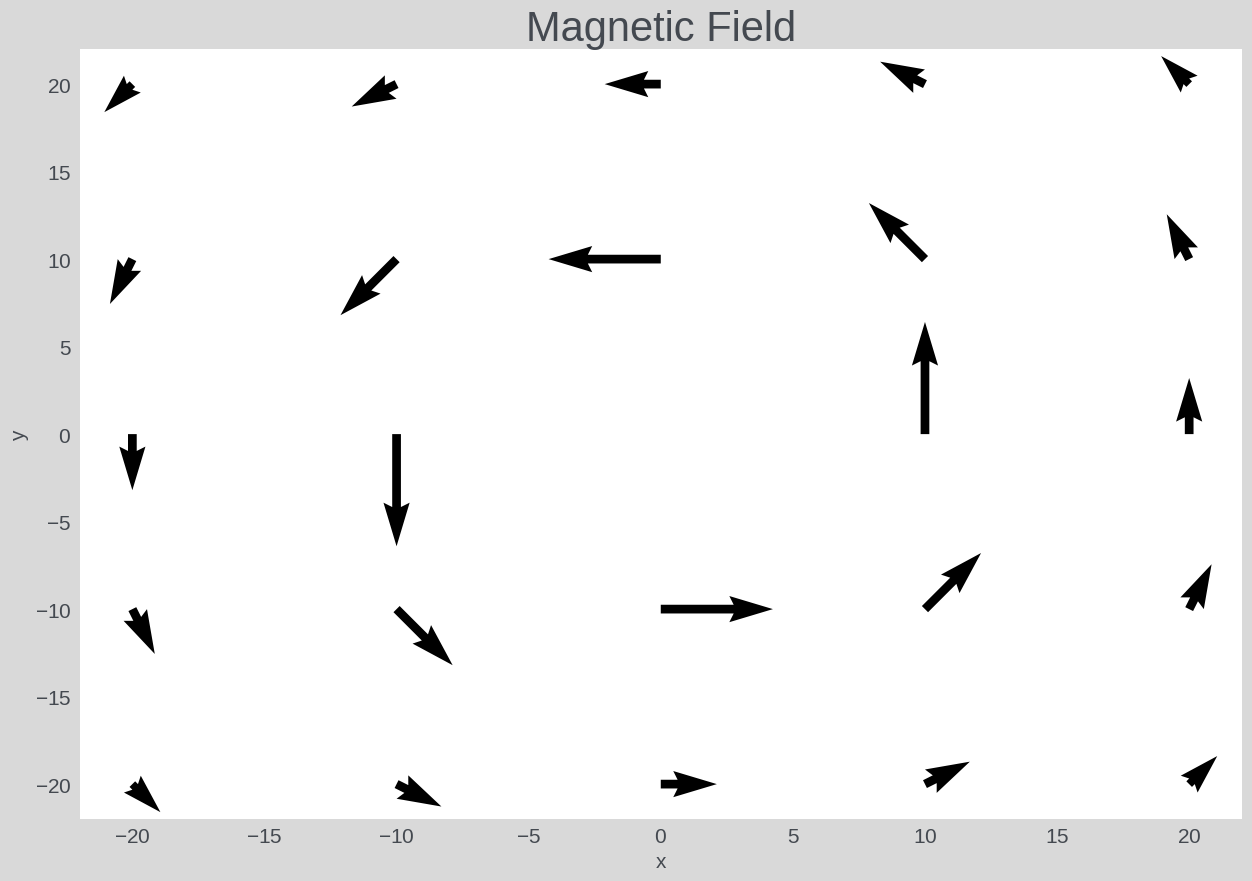

Magnetic Field

In this example, we use the magnetic field around a long, straight current-carrying wire (at the center), which can be written as:

\[\mathbf{B}(x, y) = \left\langle -\frac{\mu_0 \cdot I \cdot y}{2\pi r^2}, \frac{\mu_0 \cdot I \cdot x}{2\pi r^2} \right\rangle\]

where \(\mu_0\) is the magnetic constant and \(I\) is the current. \(r^2=x^2+y^2\) is the square of the distance from the wire, which we assume is at the center of the plot. The field circles around the wire, with magnitude inversely proportional to the distance.

What is an ODE?

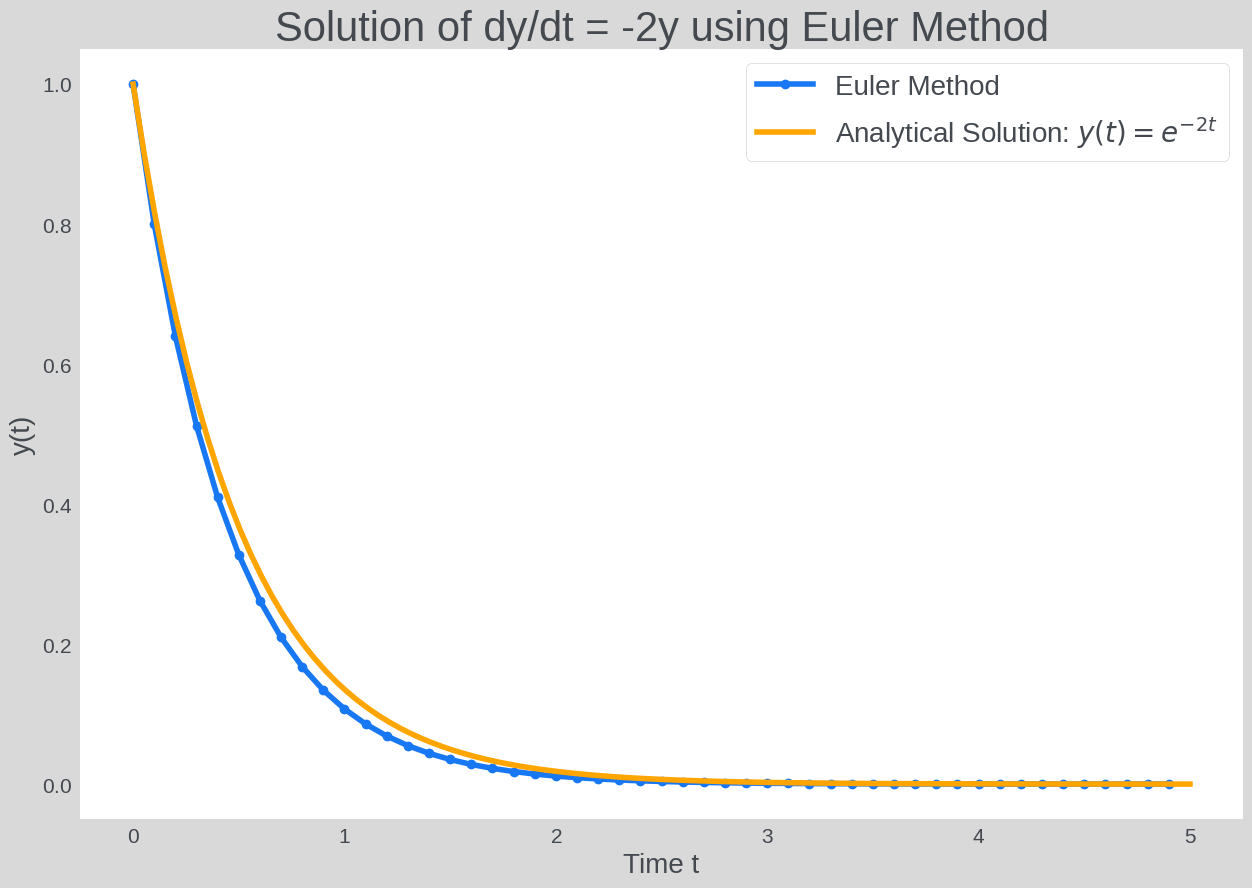

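An ordinary differential equation (ODE) relates a function of a single variable to its derivatives. Let’s start with a simple example: assume that the direction and rate of change of a function \(y\) with respect to \(t\) are described as follows: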

\[\frac{dy}{dt} = -2y\]

In other words, this equation describes how the quantity $y$ changes over time $t$.

What is an ODE Solver?

The above ODE defines the relationship of the variable $y$ with time, and more specifically, how $y$ changes through time. It does not, however, tell us the exact form of $y(t)$. To find $y(t)$ from the given ODE, we have to integrate the rate of change of $y$ to recover the original function $y(t)$ that describes the behaviour of $y$ over time. An ODE solver performs this integration numerically.
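For this particular ODE the integration can also be carried out by hand, which gives a reference against which a numerical solver can be checked. A short derivation by separation of variables, assuming the initial condition $y(0) = y_0$:

\[\frac{dy}{y} = -2\,dt \;\Rightarrow\; \ln|y| = -2t + C \;\Rightarrow\; y(t) = y_0\, e^{-2t}\]

With $y_0 = 1$, for example, this gives $y(5) = e^{-10} \approx 4.5 \times 10^{-5}$.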

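One of the simplest ODE solvers is the Euler method, which repeatedly applies the update $y_{n+1} = y_n + h \cdot f(t_n, y_n)$, where $f(t, y) = -2y$ is the right-hand side of the ODE and $h$ is the step size. If we start from the initial conditions $t_0 = 0$ and $y_0 = 1$ and integrate over the range 0 to 5 with a step size of 0.1, this update gives an estimate of $y$ at every timestep between 0 and 5.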
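A minimal Python sketch of this computation, assuming the fixed-step Euler update $y_{n+1} = y_n + h \cdot f(t_n, y_n)$. The names `euler_solve` and `decay` are illustrative and not from the original post.

```python
import math


def euler_solve(f, t0, y0, t_end, h):
    """Integrate dy/dt = f(t, y) from t0 to t_end with fixed-step Euler."""
    n_steps = round((t_end - t0) / h)
    ts, ys = [t0], [y0]
    for n in range(n_steps):
        t_n, y_n = ts[-1], ys[-1]
        ys.append(y_n + h * f(t_n, y_n))  # Euler update
        ts.append(t0 + (n + 1) * h)       # avoids accumulating rounding error in t
    return ts, ys


def decay(t, y):
    """Right-hand side of dy/dt = -2y."""
    return -2.0 * y


ts, ys = euler_solve(decay, t0=0.0, y0=1.0, t_end=5.0, h=0.1)
exact = math.exp(-2.0 * ts[-1])
print(f"Euler estimate of y(5): {ys[-1]:.3e}, exact value: {exact:.3e}")
```

Each Euler step multiplies $y$ by $1 - 2h = 0.8$, so after 50 steps the estimate is $0.8^{50} \approx 1.4 \times 10^{-5}$, compared to the exact $e^{-10} \approx 4.5 \times 10^{-5}$. A smaller step size narrows this gap at the cost of more steps.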

Generative Modeling by leveraging Vectorfields

Now that we have a better understanding of vectorfields, what they are, and how they are represented and used, let’s focus on the main topic of this post, which is about generative modeling.

Vectorfields can be used to build generative models. In fact, many different kinds of generative models leverage vectorfields in their modeling.

Recall from the previous sections that if a quantity is described by an ODE (e.g., $\frac{dy}{dt}=f(t,y)$) that specifies how it changes over the range of a given variable (e.g., $t$), we can integrate that ODE to estimate the quantity as a function of that variable.

What we have at hand for this approximation is the change of $y$ with respect to $t$. Let’s now assume that $t$ represents the level of noise (and not time) in $y$, and that this change is given by the vectorfield function $f$:

\[\frac{dy}{dt}=f\]

By integrating over all values of $t$ (i.e., over all levels of noise $t$ for $y$), we can find $y = \int f(t) \, dt + C$, where $C$ is a constant. Therefore, using an ODE solver, and given the vectorfield function $f(t)$, we can obtain an approximation of $y$. If $y$ is the data generation function we are interested in, and we have a good approximation of $f$, we now have a recipe for a generative model.

How to estimate the vectorfield function for a generative model from data?

Let $x$ represent a sample from a data distribution $X$, and let $t$ be a variable representing the amount of noise in $x$: if $t=0$ there is only noise, and if $t=1$ there is no noise. Given a noisy sample $x_n$ with noise level $t_n\in[0, 1]$, we consider the following formulation:

\[x_{n+1} = x_n + h \cdot f(t_n, x_n)\]

where $f(t_n, x_n)$ is a vectorfield function representing the rate and direction of change from $x_n$ to $x_{n+1}$, and $h$ is the step size. Given the initial condition $t_0=0$ and $x_0 = z \sim \mathcal{N}(0, 1)$, we can use an ODE solver such as Euler to find a denoised sample $x \sim X$.

In other words, if we can manage to estimate this vectorfield, we will then have our generative model of $X$! We can actually parameterize $f$ using a neural network such that given any $t$ it can estimate the direction of change to arrive at the denoised sample $x$:

\[f_{\theta}(t_n, x_n) \approx x_1 - x_0, \quad t_n \in [0, 1]\]

Here $x_0 = z$ is the noise sample and $x_1 = x$ is the data sample. We can train this parameterized model in a supervised fashion by creating a training set of $(x_\text{input},t, u_\text{output})$ where:

\[x_\text{input} = z + t \cdot (x - z)\]

\[u_\text{output}=x - z\]

To create a diverse input, we choose a point on the straight line between a real sample $x$ and a noise sample $z$, where $t$ defines how close that point is to the real sample. After training is finished, the model should be able to estimate the aforementioned vectorfield.
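As a small worked example of these two formulas, the sketch below builds one training pair from a hypothetical data sample and a hypothetical noise sample, both chosen by hand for illustration. The function name `make_training_pair` is also illustrative.

```python
def make_training_pair(x, z, t):
    """Return (x_input, u_output) for data sample x, noise sample z and t in [0, 1]."""
    # Point on the straight line from z (t=0) to x (t=1).
    x_input = tuple(z_i + t * (x_i - z_i) for x_i, z_i in zip(x, z))
    # Regression target: the constant direction from noise to data.
    u_output = tuple(x_i - z_i for x_i, z_i in zip(x, z))
    return x_input, u_output


x = (2.0, 0.0)  # hypothetical data sample
z = (0.0, 1.0)  # hypothetical noise sample
x_input, u_output = make_training_pair(x, z, t=0.25)
print(x_input, u_output)
```

With $t = 0.25$ the input lies a quarter of the way from the noise sample to the data sample, while the target $x - z$ is the same for every $t$ along that line.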

The vectorfield model is a neural network, such as the following example, that can be trained in a supervised fashion with $x_\text{input}, t$ as input and $u_\text{output}$ as output (label), as denoted above.

```python
import torch
import torch.nn as nn


class VFNet(nn.Module):
    """MLP that predicts a 2-D vectorfield from a noise level t and a 2-D point x."""

    def __init__(self, nsteps=1):
        super().__init__()
        self.nsteps = nsteps
        # Input is [t, x] (1 + 2 features); output is a 2-D vector.
        self.vf_model = nn.Sequential(
            nn.Linear(3, 512),
            nn.SiLU(),
            nn.Linear(512, 512),
            nn.SiLU(),
            nn.Linear(512, 512),
            nn.SiLU(),
            nn.Linear(512, 512),
            nn.SiLU(),
            nn.Linear(512, 2),
        )
        self.mse_loss = nn.MSELoss()

    def vf_func(self, t, x):
        x = x.reshape(-1, 2)
        # Broadcast a scalar (or per-sample) t to one value per row of x.
        t = t.reshape(-1, 1).expand(x.shape[0], 1)
        tx = torch.cat([t, x], dim=-1)
        return self.vf_model(tx)

    def loss(self, batch):
        bsz = batch.shape[0]
        x0 = torch.randn(bsz, 2).to(batch.device)
        t = torch.rand(bsz, 1).to(batch.device)
        # a sample on the optimal transport path (straight line) between the noise (x0) and data (batch)
        x_t = x0 + t * (batch - x0)
        # ground-truth vectorfield
        u_t = batch - x0
        # predicted vectorfield
        v_t = self.vf_func(t, x_t)
        loss = self.mse_loss(v_t, u_t)
        return loss
```

Sampling in Vectorfield-Based Generative models

After training $f_{\theta}$, we can start the sampling process via an ODE solver such as Euler with the initial conditions $t_0=0$ and $x_0=z \sim\mathcal{N}(0,1)$. Using this vectorfield, we can generate new samples, which we visualize in the following plot:
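The Euler sampling loop itself can be sketched in a few lines. This is a minimal, framework-agnostic sketch rather than the exact sampler used for the plots: `euler_sample` is an illustrative name, `vf` stands for any callable `vf(t, x)` that returns the vectorfield at noise level `t`, and the step count is an assumed default.

```python
def euler_sample(vf, x0, n_steps=100):
    """Integrate dx/dt = vf(t, x) from t=0 to t=1 with fixed-step Euler."""
    h = 1.0 / n_steps
    x = x0
    for n in range(n_steps):
        t_n = n * h
        x = x + h * vf(t_n, x)  # x_{n+1} = x_n + h * f(t_n, x_n)
    return x


# Toy check with a known field: a constant velocity of 3 carries x0 = 1 to 4 at t = 1.
x1 = euler_sample(lambda t, x: 3.0, x0=1.0, n_steps=10)
print(x1)
```

Because the function only uses `+` and `*`, it should also work with tensors. With a trained `VFNet` instance `model`, one possible call is `euler_sample(lambda t, x: model.vf_func(torch.tensor(t), x), torch.randn(1000, 2))` inside a `torch.no_grad()` block, assuming the model and the tensors live on the same device.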

Choice of Prior Distribution

Often, the source distribution used in generative models such as flow matching is chosen to be a simple distribution such as a Gaussian. However, this is not a strict requirement, and we can train the vectorfield network with more complex source distributions as well. To demonstrate this, we use a set of toy datasets, including some from the Datasaurus collection.

You can see that, as long as the vectorfield model learns to predict the vectorfield correctly, the generated samples are fairly good. This is only a small example, though, and might not hold in extreme cases.

Reducing sampling steps

Usually, a flow matching model is trained by continuously sampling $t\in[0,1]$, and consequently sampled with an ODE solver that relies on a continuous $t$. But what if we fixed the solver steps beforehand? E.g., Euler with $t\in T=\lbrace0,0.25,…,1 \rbrace$ and step size $h=\frac{1}{|T|}$?
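One way to read this setup in code is sketched below. It rests on an assumption that is not stated in the post: fixed-step Euler with $n$ steps only evaluates the vectorfield at $t_k = k \cdot h$ for $k = 0, \dots, n-1$, so the sketch draws training values of $t$ from exactly those $n$ points. Whether the endpoint $t=1$ was also part of the training grid is not specified here. The names `make_time_grid` and `sample_training_times` are hypothetical.

```python
import random


def make_time_grid(n_steps):
    """Noise levels visited by an n-step Euler solver on [0, 1], plus the step size."""
    h = 1.0 / n_steps
    return [k * h for k in range(n_steps)], h


def sample_training_times(grid, batch_size, rng=random):
    """Draw one noise level per training example from the fixed grid."""
    return [rng.choice(grid) for _ in range(batch_size)]


grid, h = make_time_grid(4)
ts = sample_training_times(grid, batch_size=8, rng=random.Random(0))
print(grid, h, ts)
```

During training these discrete values would replace the uniform draw of $t$, and at sampling time the solver would take the same number of steps with the same $h$.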

Although this may not generalize as well as an adaptive solver, as a proof of concept we have trained a model by only drawing $t$ from $T$, and used fixed-step Euler with $h=\frac{1}{|T|}$ for sampling. Here is the result:

It appears that the vectorfield function still learns meaningfully and can transfer the source samples towards the target. Reducing sampling costs is an active area of research, and ideas similar to the one above have also been explored in the literature.